机器之心 & ArXiv Weekly

(资料图片)

(资料图片)

参与:楚航、罗若天、梅洪源

本周重要论文包括图灵奖得主 Yann LeCun 世界模型的首项研究,以及 Meta 开源的文本生成音乐模型 MusicGen。

目录:

Self-Supervised Learning from Images with a Joint-Embedding Predictive Architecture

Adversarial Example Does Good: Preventing Painting Imitation from Diffusion Models via Adversarial Examples

Disentangling Writer and Character Styles for Handwriting Generation

INSTRUCTEVAL: Towards Holistic Evaluation of Instruction-Tuned Large Language Models

Reverse Engineering Self-Supervised Learning

VideoComposer: Compositional Video Synthesis with Motion Controllability

Simple and Controllable Music Generation

ArXiv Weekly Radiostation:NLP、CV、ML 更多精选论文(附音频)

论文 1:Self-Supervised Learning from Images with a Joint-Embedding Predictive Architecture

作者:Mahmoud Assran 等

论文链接:https://arxiv.org/pdf/2301.08243.pdf

摘要:让 AI 像人类一样学习和推理,这是人工智能迈向人类智能的重要一步。图灵奖得主 Yann LeCun 曾提出自监督 + 世界模型的解决方案,如今终于有了第一个实实在在的视觉模型 ——I-JEPA。如下图所示,I-JEPA 使用单个上下文块来预测源自同一图像的各种目标块的表征。

推荐:LeCun 世界模型首项研究来了:自监督视觉,像人一样学习和推理,已开源。

论文 2:Adversarial Example Does Good: Preventing Painting Imitation from Diffusion Models via Adversarial Examples

作者:Chumeng Liang 等

论文链接:https://arxiv.org/abs/2302.04578

摘要:本文介绍的是一篇收录于 ICML 2023 Oral 的论文,论文由位于上海交通大学的上海市可扩展计算与系统重点实验室、纽约大学和贝尔法斯特女王大学的华扬老师共同完成。论文的共同一作是即将攻读南加州大学博士学位的梁楚盟和上海交通大学的研究生吴晓宇。

推荐:给图片悄悄加上像素级水印:防止 AI「抄袭」艺术作品的方法找到了。

论文 3:Disentangling Writer and Character Styles for Handwriting Generation

作者:Gang Dai 等

论文链接:https://arxiv.org/abs/2303.14736

摘要:本文中,来自华南理工大学、新加坡国立大学、香港理工大学以及琶洲实验室的研究者们联合提出一种有趣的手写文字生成方法,仅需提供少量的参考样本即可临摹用户的书写风格,进而生成符合该风格的任意文字。

推荐:会模仿笔迹的 AI,为你创造专属字体,入选 CVPR 2023。

论文 4:INSTRUCTEVAL: Towards Holistic Evaluation of Instruction-Tuned Large Language Models

作者:Yew Ken Chia 等

论文链接:https://arxiv.org/abs/2306.04757

摘要:这么多年来,指令调优大语言模型的性能到底怎么样呢?本研究提出了一个全新的评估套件,对它们在解决问题、写作和对齐人类价值观等方面进行了全面评估,结果可能超乎你的预料。研究者在下表 3 中提供了开源指令模型的整体概述。

推荐:四年了,基础开源模型没有真正进步,指令调优大模型评估惊人发现。

论文 5:Reverse Engineering Self-Supervised Learning

作者:Ido Ben-Shaul 等

论文链接:https://arxiv.org/abs/2305.15614v2

摘要:自监督学习可以利用辅助任务(pretext)无监督数据中挖掘自身的监督信息,通过这种构造的监督信息对网络进行训练,从而可以学习到对下游任务有价值的表征。近日,图灵奖得主 Yann LeCun 在内的多位研究者发布了一项研究,宣称对自监督学习进行了逆向工程,让我们得以了解其训练过程的内部行为。

为了直观地理解 SSL 训练,下图 1 通过 UMAP 可视化展示了网络的训练样本的嵌入空间,其中包含训练前后的情况并分了不同层级。

推荐:Yann LeCun 团队新研究成果:对自监督学习逆向工程,原来聚类是这样实现的。

论文 6:VideoComposer: Compositional Video Synthesis with Motion Controllability

作者:Xiang Wang 等

论文链接:https://arxiv.org/abs/2306.02018

摘要:在 AI 绘画领域,阿里提出的 Composer 和斯坦福提出的基于 Stable diffusion 的 ControlNet 引领了可控图像生成的理论发展。但是,业界在可控视频生成上的探索依旧处于相对空白的状态。相比于图像生成,可控的视频更加复杂,因为除了视频内容的空间的可控性之外,还需要满足时间维度的可控性。基于此,阿里巴巴和蚂蚁集团的研究团队率先做出尝试并提出了 VideoComposer,即通过组合式生成范式同时实现视频在时间和空间两个维度上的可控性。

该研究在 9 个不同的经典任务上直接测试 VideoComposer 的性能,均获得满意的结果,证明了 VideoComposer 通用性。

推荐:时间、空间可控的视频生成走进现实,阿里大模型新作 VideoComposer 火了。

论文 7:Simple and Controllable Music Generation

作者:Jade Copet 等

论文链接:https://arxiv.org/pdf/2306.05284.pdf

摘要:年初,谷歌推出了音乐生成大模型 MusicLM,效果非常不错。有人称这比大火的 ChatGPT 还重要,几乎解决了音乐生成问题。近日,Meta 也推出了自己的文本音乐生成模型 MusicGen,并且非商业用途免费使用。

如下输入周杰伦《七里香》歌词中的前两句「窗外的麻雀在电线杆上多嘴,你说这一句 很有夏天的感觉」(支持中文)。

推荐:Meta 开源文本生成音乐大模型,我们用《七里香》歌词试了下。

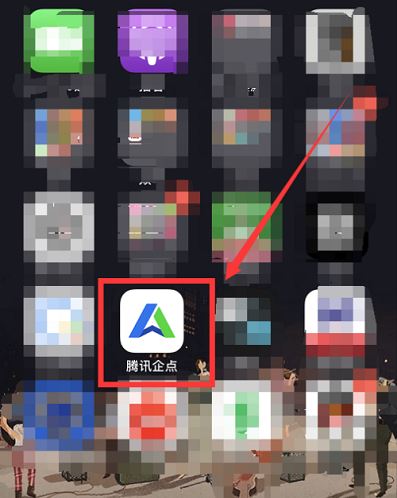

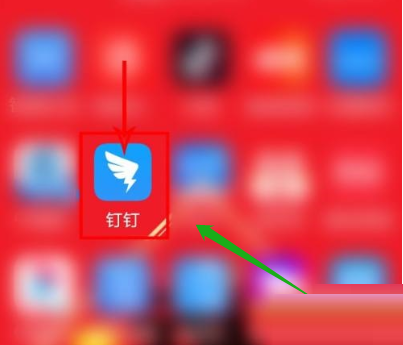

ArXiv Weekly Radiostation

机器之心联合由楚航、罗若天、梅洪源发起的ArXiv Weekly Radiostation,在 7 Papers 的基础上,精选本周更多重要论文,包括NLP、CV、ML领域各10篇精选,并提供音频形式的论文摘要简介,详情如下:

本周 10 篇 NLP 精选论文是:

1. Can Large Language Models Infer Causation from Correlation?. (from Bernhard Schölkopf)

2. Developing Speech Processing Pipelines for Police Accountability. (from Dan Jurafsky)

3. SqueezeLLM: Dense-and-Sparse Quantization. (from Michael W. Mahoney, Kurt Keutzer)

4. Morphosyntactic probing of multilingual BERT models. (from Noah A. Smith)

5. ChatGPT for Us: Preserving Data Privacy in ChatGPT via Dialogue Text Ambiguation to Expand Mental Health Care Delivery. (from Kai-Wei Chang, Majid Sarrafzadeh)

6. Language models are not naysayers: An analysis of language models on negation benchmarks. (from Timothy Baldwin)

7. Modality Adaption or Regularization? A Case Study on End-to-End Speech Translation. (from Jingbo Zhu)

8. Xiezhi: An Ever-Updating Benchmark for Holistic Domain Knowledge Evaluation. (from Rui Xu)

9. Word sense extension. (from Lei Yu)

10. Instruction Tuned Models are Quick Learners. (from Chitta Baral)

本周 10 篇 CV 精选论文是:

1. Multi-Modal Classifiers for Open-Vocabulary Object Detection. (from Andrew Zisserman)

2. AVIS: Autonomous Visual Information Seeking with Large Language Models. (from Kai-Wei Chang, Cordelia Schmid)

3. SMC-UDA: Structure-Modal Constraint for Unsupervised Cross-Domain Renal Segmentation. (from Rama Chellappa, Xinbo Gao)

4. Aladdin: Zero-Shot Hallucination of Stylized 3D Assets from Abstract Scene Descriptions. (from Leonidas Guibas)

5. Adding 3D Geometry Control to Diffusion Models. (from Alan Yuille)

6. Compositor: Bottom-up Clustering and Compositing for Robust Part and Object Segmentation. (from Alan Yuille)

7. Teaching AI to Teach: Leveraging Limited Human Salience Data Into Unlimited Saliency-Based Training. (from Kevin Bowyer)

8. Instant Multi-View Head Capture through Learnable Registration. (from Michael J. Black)

9. FlowFormer: A Transformer Architecture and Its Masked Cost Volume Autoencoding for Optical Flow. (from Xiaogang Wang)

10. MOFI: Learning Image Representations from Noisy Entity Annotated Images. (from Jon Shlens)

本周 10 篇 ML 精选论文是:

1. A Comprehensive Survey on Applications of Transformers for Deep Learning Tasks. (from Witold Pedrycz)

2. Inductive Linear Probing for Few-shot Node Classification. (from Huan Liu)

3. Virtual Node Tuning for Few-shot Node Classification. (from Huan Liu)

4. Understanding How Consistency Works in Federated Learning via Stage-wise Relaxed Initialization. (from Dacheng Tao)

5. Extending Kernel PCA through Dualization: Sparsity, Robustness and Fast Algorithms. (from Johan A. K. Suykens)

6. Variational Positive-incentive Noise: How Noise Benefits Models. (from Xuelong Li)

7. Privacy Preserving Bayesian Federated Learning in Heterogeneous Settings. (from Joydeep Ghosh)

8. One-for-All: Generalized LoRA for Parameter-Efficient Fine-tuning. (from Eric Xing)

9. Identification of Nonlinear Latent Hierarchical Models. (from Eric Xing)

10. Composing Efficient, Robust Tests for Policy Selection. (from Peter Stone)

关键词: